hello, i dont know why stata keeps me showing invalid syntax in this loop:

foreach x of p524a1_14 p524a1_15 p524a1_16 p524a1_17 p524a1_18{

summ `x'

gen ING_`x' = (`x'-r(mean))/r(sd)

}

i tried with an space just before the "{" and every way but i cant do it

please send your suggestion

thank you so much

Specialized on Data processing, Data management Implementation plan, Data Collection tools - electronic and paper base, Data cleaning specifications, Data extraction, Data transformation, Data load, Analytical Datasets, and Data analysis. BJ Data Tech Solutions teaches on design and developing Electronic Data Collection Tools using CSPro, and STATA commands for data manipulation. Setting up Data Management systems using modern data technologies such as Relational Databases, C#, PHP and Android.

Thursday, September 30, 2021

Survival Analysis - different outputs

Hello everyone,

I am trying to do survival analysis on 2018 Nigeria Demographic and Health Survey Data.

The data has 33,924 observations, but after I expanded it to capture every month a child lived before death or censoring it got to 934,141 observations.

From what I understand from texts, 'sts list' and 'ltable t dead, noadjust' commands should give the same output.

But I'm getting different outputs:

'sts list' used 33,924 as its beginning total and at the end of the 60-month period, there are 88.28 percent children still alive, which looks about right. While,

'ltable t dead, noadjust' used 934,141 as its beginning total and at the end of the 60-month period, there are 99.39 children still alive.

Please, I want to understand what could be wrong, since both outputs should be the same.

What did I do wrong please?

Below is the dataex output

Thank you for your help.

I am trying to do survival analysis on 2018 Nigeria Demographic and Health Survey Data.

The data has 33,924 observations, but after I expanded it to capture every month a child lived before death or censoring it got to 934,141 observations.

From what I understand from texts, 'sts list' and 'ltable t dead, noadjust' commands should give the same output.

But I'm getting different outputs:

'sts list' used 33,924 as its beginning total and at the end of the 60-month period, there are 88.28 percent children still alive, which looks about right. While,

'ltable t dead, noadjust' used 934,141 as its beginning total and at the end of the 60-month period, there are 99.39 children still alive.

Please, I want to understand what could be wrong, since both outputs should be the same.

What did I do wrong please?

Below is the dataex output

Code:

* Example generated by -dataex-. To install: ssc install dataex clear input float(t pid study_time) byte died float dead 1 1 13 0 0 2 1 13 0 0 3 1 13 0 0 4 1 13 0 0 5 1 13 0 0 6 1 13 0 0 7 1 13 0 0 8 1 13 0 0 9 1 13 0 0 10 1 13 0 0 11 1 13 0 0 12 1 13 0 0 13 1 13 0 0 1 2 9 0 0 2 2 9 0 0 3 2 9 0 0 4 2 9 0 0 5 2 9 0 0 6 2 9 0 0 7 2 9 0 0 8 2 9 0 0 9 2 9 0 0 1 3 17 0 0 2 3 17 0 0 3 3 17 0 0 4 3 17 0 0 5 3 17 0 0 6 3 17 0 0 7 3 17 0 0 8 3 17 0 0 9 3 17 0 0 10 3 17 0 0 11 3 17 0 0 12 3 17 0 0 13 3 17 0 0 14 3 17 0 0 15 3 17 0 0 16 3 17 0 0 17 3 17 0 0 1 4 31 0 0 2 4 31 0 0 3 4 31 0 0 4 4 31 0 0 5 4 31 0 0 6 4 31 0 0 7 4 31 0 0 8 4 31 0 0 9 4 31 0 0 10 4 31 0 0 11 4 31 0 0 12 4 31 0 0 13 4 31 0 0 14 4 31 0 0 15 4 31 0 0 16 4 31 0 0 17 4 31 0 0 18 4 31 0 0 19 4 31 0 0 20 4 31 0 0 21 4 31 0 0 22 4 31 0 0 23 4 31 0 0 24 4 31 0 0 25 4 31 0 0 26 4 31 0 0 27 4 31 0 0 28 4 31 0 0 29 4 31 0 0 30 4 31 0 0 31 4 31 0 0 1 5 39 0 0 2 5 39 0 0 3 5 39 0 0 4 5 39 0 0 5 5 39 0 0 6 5 39 0 0 7 5 39 0 0 8 5 39 0 0 9 5 39 0 0 10 5 39 0 0 11 5 39 0 0 12 5 39 0 0 13 5 39 0 0 14 5 39 0 0 15 5 39 0 0 16 5 39 0 0 17 5 39 0 0 18 5 39 0 0 19 5 39 0 0 20 5 39 0 0 21 5 39 0 0 22 5 39 0 0 23 5 39 0 0 24 5 39 0 0 25 5 39 0 0 26 5 39 0 0 27 5 39 0 0 28 5 39 0 0 29 5 39 0 0 30 5 39 0 0 end

Thank you for your help.

Arranging bars of graph bar

Hello community. I am producing a graph with Stata. Here is the output :

Array

And here is the code

I would rather like to put the bars of the same color side-by-side. So three groups (Av. var1 for the two groups of Catvar), (Av. var2 for the two groups of Catvar), and (Av. var3 for the two groups of Catvar). This is possible in Excel for example. Is it possible with Stata ? Any help is welcome.

Best.

Array

And here is the code

Code:

graph bar var1 var2 var3 [aw=weight], /// over(Catvar) /// blabel(bar,format(%9.2f)) /// legend(label(1 "Av. var1") label(2 "Av. var2") /// label(3 "Avg 3")) yla(5(5)25,nogrid) graphregion(color(white))

Best.

Reshape numerous variables

I have a vector of numerous variables (>100) that I need to reshape. Basically the format of the variables are the following.

Country GDP Population v1_2000 v1_2001 v1_2002 .. v1_2020 v2_2000 v2_2001 v2_2002 ... v2_2020 .... v50_2000 v50_2001 ... v50_2020

For the simplicity I generated data as suggested.

Then of course, here I have only two variables (v1_, v2_), so I can simply do

But in my actual data, I have v1_, v2_, ...... v_100.

I tried to define

and etc, but so far nothing works.

How can we reshape such large list of variables v1_, ..... v100_ ?

Country GDP Population v1_2000 v1_2001 v1_2002 .. v1_2020 v2_2000 v2_2001 v2_2002 ... v2_2020 .... v50_2000 v50_2001 ... v50_2020

For the simplicity I generated data as suggested.

Code:

clear

input str72 country str24 units float(v1_2000 v1_2001 v1_2002 v1_2003) double(v2_2000 v2_2001 v2_2002 v2_2003)

"Austria" "Percent change" 2.048 -3.613 1.329 1.708 1.806 1.999 2.086 2.197

"Austria" "Percent of potential GDP" -1.804 -2.958 -4.279 -4.092 . . . .

"Belgium" "Percent change" .832 -3.006 1.153 1.336 1.605 1.707 1.83 1.876

"Belgium" "Percent of potential GDP" -2.112 -4.786 -4.257 -3.425 . . . .

"Czech Republic" "Percent change" 2.464 -4.287 1.675 2.629 3.5 3.5 3.5 3.5

"Czech Republic" "Percent of potential GDP" . . . . . . . .

"Denmark" "Percent change" -.87 -5.071 1.2 1.557 2.567 2.634 2.297 2.344

"Denmark" "Percent of potential GDP" 3.692 .038 -1.726 -1.494 . . . .

endCode:

reshape long v1_ v2_ , i(country units) j(year)

I tried to define

Code:

local varlist v1_ v2_ ... v_100

How can we reshape such large list of variables v1_, ..... v100_ ?

Stratified sample with probability proportional to size

We have a total of 908 communities (each community has a number of households) which are located in the sphere of influence of roads / highways (social inclusion and logistics corridors), they are divided into groups (intervention and control) and They belong to 2 strata: near and far (with respect to the capital of the community). A community should be selected with a probability proportional to the number of households in each community within each strata, each type of road / highway and group to which it belongs. What command in Stata could be used to make the community selection with PPS?

Combining Two Similar Variables To be in one row

Hi All,

I have the following 2 variables that serve the same purpose and I'm trying to generate one variable for both. Schoolfees1 has school values for ages 15 years to 19 years. Schoolfees2 is school values for ages 10 to 14 year olds. I would like to generate an overall fees variable called fees1 and would like the amount of school fees to show. The results shows as 0 or 1.

*My results show as follows

*Please note that gap are other ages that are not part of the equation. I'm only interested in ages 10 to 19 year old

input long(schoolfees1 schoolfees2) float fees1

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

7500 . 1

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

700 . 1

. . .

. . .

. . .

. . .

. . .

. . .

. . .

4000 . 1

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

250 . .

100 . 1

15 . 1

. . .

250 . 1

. . .

. . .

. . .

. . .

. . .

. . .

50 . 1

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . 1

. . .

. . .

. . .

650 . 1

. . .

. . .

. . .

. . .

. . 1

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

650 . 1

. . .

end

label values schoolfees1 w1_nonres

label values schoolfees2 w1_nonres

[/CODE]

Thanks

Nthato

I have the following 2 variables that serve the same purpose and I'm trying to generate one variable for both. Schoolfees1 has school values for ages 15 years to 19 years. Schoolfees2 is school values for ages 10 to 14 year olds. I would like to generate an overall fees variable called fees1 and would like the amount of school fees to show. The results shows as 0 or 1.

Code:

gen fees1 = schoolfees1 | schoolfees2 if age1>9 & age1<20

*Please note that gap are other ages that are not part of the equation. I'm only interested in ages 10 to 19 year old

input long(schoolfees1 schoolfees2) float fees1

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

7500 . 1

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

700 . 1

. . .

. . .

. . .

. . .

. . .

. . .

. . .

4000 . 1

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

250 . .

100 . 1

15 . 1

. . .

250 . 1

. . .

. . .

. . .

. . .

. . .

. . .

50 . 1

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . 1

. . .

. . .

. . .

650 . 1

. . .

. . .

. . .

. . .

. . 1

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

. . .

650 . 1

. . .

end

label values schoolfees1 w1_nonres

label values schoolfees2 w1_nonres

[/CODE]

Thanks

Nthato

quarterly returns

Hi

To calculate quarterly index returns from monthly index returns starting from the first month of the quarter to the third month of the quarter, I do:

I want now to calculate quarterly index returns starting from one month prior to the quarter-end until two months after the quarter-end. How can I adjust my code to achieve this?

An example of my data is here:

Thanks

To calculate quarterly index returns from monthly index returns starting from the first month of the quarter to the third month of the quarter, I do:

Code:

gen log_monthly_factor=log(1+vwretd) by fqdate, sort: egen quarterly_vwretd=total(log_monthly_factor) replace quarterly_vwretd=(exp(quarterly_vwretd)-1)

An example of my data is here:

Code:

* Example generated by -dataex-. To install: ssc install dataex clear input long DATE double vwretd float(year fqdate) 28 -.06624392 1960 0 59 .01441908 1960 0 90 -.01282217 1960 0 119 -.01527067 1960 1 151 .03409799 1960 1 181 .0228328 1960 1 210 -.02270468 1960 2 243 .03221498 1960 2 273 -.058673340000000004 1960 2 304 -.004704664 1960 3 334 .0486173 1960 3 364 .04853724 1960 3 396 .0639524 1961 4 424 .03700465 1961 4 454 .030609920000000002 1961 4 483 .005644733000000001 1961 5 516 .02589407 1961 5 546 -.02849906 1961 5 577 .02995465 1961 6 608 .026854410000000002 1961 6 637 -.01999036 1961 6 669 .027331130000000002 1961 7 699 .04545113 1961 7 728 .0007129512 1961 7 761 -.036146990000000004 1962 8 789 .01951236 1962 8 end format %td DATE format %tq fqdate

The "replace" option to the table command in Stata 16 is on longer available in Stata17; is there an alternative in Stata 17?

In Stata 16 you could code:

table ..., replace

to replace the data in memory with the results produced by the table command.

Is there an alternative in Stata 17, or a way to convert results from a collection to data?

Regards Kim

table ..., replace

to replace the data in memory with the results produced by the table command.

Is there an alternative in Stata 17, or a way to convert results from a collection to data?

Regards Kim

Kleibergen Paap F statistic test in xtdpdgmm

Hi everyone, I'm currently working with xtdpdgmm for my thesis but I'm confused how to get Kleibergen Paap F statistic test for weak instrument, can someone help me?

Here 's my code :

xtdpdgmm ROA l.DebtTA bi_3thn_out gsales size c.size#c.size c.l.DebtTA#c.bi_3thn_out if tin(2009,2019), model(diff) gmm(bi_3thn_out, lag(2 4) m(level)) gmm(ndtax tan, lag(2 2) diff m(diff)) two vce(r) nofootnote

Thankyou in advance!

Here 's my code :

xtdpdgmm ROA l.DebtTA bi_3thn_out gsales size c.size#c.size c.l.DebtTA#c.bi_3thn_out if tin(2009,2019), model(diff) gmm(bi_3thn_out, lag(2 4) m(level)) gmm(ndtax tan, lag(2 2) diff m(diff)) two vce(r) nofootnote

Thankyou in advance!

how to do instrument strength test for non-i.i.d error (more than one endogenous variables)

Dear all,

I wish to test instrument strength for the non-i.i.d error, and I have 3 endogenous variables. I noticed that the STATA command weakivtest by Montiel Olea and Pflueger (2013) is robust to non-i.i.d error, but it is restricted to one endogenous variable. Is there any code or literature suggested regarding multiple endogenous variables?

Great thanks,

Haiyan

I wish to test instrument strength for the non-i.i.d error, and I have 3 endogenous variables. I noticed that the STATA command weakivtest by Montiel Olea and Pflueger (2013) is robust to non-i.i.d error, but it is restricted to one endogenous variable. Is there any code or literature suggested regarding multiple endogenous variables?

Great thanks,

Haiyan

How do I create a new variable which counts number of variables that satisfy a given condition?

Hallo Statalist,

I am new here. I have a dataset of different towns with monthly temperatures over a 10-year period (i.e. 120 months). The dataset has just over 16,000 towns. I want a new variable which gives me the number of times each town recorded temperature exceeding a given threshold, say 15 degrees. Is there a simple was to do this without reshaping the data? Here is the sample data for the first 5 towns over the first 5 months

Thanks you!

I am new here. I have a dataset of different towns with monthly temperatures over a 10-year period (i.e. 120 months). The dataset has just over 16,000 towns. I want a new variable which gives me the number of times each town recorded temperature exceeding a given threshold, say 15 degrees. Is there a simple was to do this without reshaping the data? Here is the sample data for the first 5 towns over the first 5 months

Code:

+------------------------------------------------------+

| town_id month1 month2 month3 month4 month5 |

|------------------------------------------------------|

1. | 1 17 10 28 4 6 |

2. | 2 14 29 15 20 16 |

3. | 3 26 7 4 5 7 |

4. | 4 25 6 29 13 10 |

5. | 5 6 17 7 5 24 |

+------------------------------------------------------+create v3 which shows number of v1 that share the same v2 value

Hi,

My dataset has 2 variables that I am interested in seeing the relationship between.

v1 is 'unique_attend' which creates a unique code for each attendance (regardless of how many occur on the same day, or years apart etc.)

v2 is 'ID' which creates a unique ID number that is assigned to an individual.

Thus if there are 5 'unique_attend' cases with the same 'ID', that person has attended 5 times.

I want to create a third variable 'num_attend' which would show how many attendances are associated with each ID, but I can't for the life of me think of how to write that condition, and haven't been able to yield anything through searching.

Thanks in advance for the help,

Jane.

My dataset has 2 variables that I am interested in seeing the relationship between.

v1 is 'unique_attend' which creates a unique code for each attendance (regardless of how many occur on the same day, or years apart etc.)

v2 is 'ID' which creates a unique ID number that is assigned to an individual.

Thus if there are 5 'unique_attend' cases with the same 'ID', that person has attended 5 times.

I want to create a third variable 'num_attend' which would show how many attendances are associated with each ID, but I can't for the life of me think of how to write that condition, and haven't been able to yield anything through searching.

Thanks in advance for the help,

Jane.

coefplot plotting results from categorical variable

Dear all,

I would like to use coefplot to compare the coefficients from different models and categorical variables. I am using different data, but I recreated a (nonsensical) example that shows my question using the auto-dataset:

My issue with the graph is that there are two groups (1.dummy_weight and 2.dummy_weight) and for each of them est_1 and est_2 are presented separately. Is there a way to have only est_1 and est_2 once on the y-axis, and then compare 1.dummy_weight and 2_dummy_weight next to it? So that there is not est_1 and est_2 in the legend at the bottom, but 1.dummy_weight and 2.dummy_weight (in blue and red)?

I am attaching a screenshot from the output and what I would like to have.

Thank you very much for your help!

All the best

Leon

Array

I would like to use coefplot to compare the coefficients from different models and categorical variables. I am using different data, but I recreated a (nonsensical) example that shows my question using the auto-dataset:

Code:

webuse auto, clear gen dummy_weight = 0 // I define a categorical variable for the weight of a car replace dummy_weight = 1 if weight > 2000 & weight <= 3000 replace dummy_weight = 2 if weight > 3000 eststo est_1: reg headroom i.dummy_weight if foreign == 0 // I estimate a model separate for domestic and foreign car makers eststo est_2: reg headroom i.dummy_weight if foreign == 1 coefplot est_*, keep(1.dummy_weight 2.dummy_weight) swapnames asequation // I compare the coefficents

I am attaching a screenshot from the output and what I would like to have.

Thank you very much for your help!

All the best

Leon

Array

Wednesday, September 29, 2021

Labels of restricted categories appearing in graph when using -by()-

I want to graph tobacco use for couples in different value groups. While the values variable has 12 categories, I only want to graph the first five, and I use -inrange()- to achieve that:

Array

However, when I add the line of code with -by()- (below), the restriction imposed by -inrange(values, 1, 5) no longer holds and the labels for all the categories appear in the graph. How can I limit this to the first five categories (G1 to G5) as shown in the first figure?

Note: "at3" is a dummy variable with values 0/1. (As an aside, how can I relabel the variable in -by()- as done with -over()-?).

Array

Stata v.15.1. Using panel data.

Code:

graph hbar lstbcn1 lstbcn2 if inrange(values, 1, 5) , nooutsides ///

bar(1, bfcolor(navy)) bar(2, bfcolor(maroon)) over(values, label(labsize(small)) ///

relabel(1 "G1" 2 "G2" 3 "G3" 4 "G4" 5 "G5")) ///

ti("", size(small)) ylabel(, labsize(small)) ytick(25(50)175, grid) ///

legend(region(lstyle(none)) order(1 "male" 2 "female") ///

rowgap(.1) colgap(1) size(small) color(none) region(fcolor(none))) name(c1, replace) ///

graphregion(margin(medthin) color(white) icolor(white)) plotregion(style(none) color(white) icolor(white))However, when I add the line of code with -by()- (below), the restriction imposed by -inrange(values, 1, 5) no longer holds and the labels for all the categories appear in the graph. How can I limit this to the first five categories (G1 to G5) as shown in the first figure?

Code:

by(at3, note("") graphregion(color(white))) ///Array

Code:

* Example generated by -dataex-. To install: ssc install dataex clear input int(lstbcn1 lstbcn2) byte(values at3) 50 60 1 0 100 50 2 0 140 200 3 0 8 25 4 1 20 4 5 1 10 60 2 1 50 110 7 0 75 100 1 0 50 35 2 0 8 25 3 1 20 4 5 1 125 100 3 0 80 25 4 0 140 60 5 0 40 20 5 1 60 40 6 0 6 2 5 1 8 25 3 1 20 4 2 1 100 100 1 0 7 5 2 1 35 2 3 1 7 5 4 1 35 2 4 1 60 30 2 1 6 2 5 1 100 100 5 0 7 5 5 1 35 2 5 1 150 100 6 0 140 28 7 0 15 10 6 1 14 8 7 1 30 160 7 1 70 70 4 0 50 10 4 0 25 35 3 1 60 30 3 0 250 140 2 0 100 140 2 0 50 80 2 1 end

What fixed effects I should add when using triple difference in firm level?

In my difference-in-difference setting (double diff), I examine the impact of anticorruption laws on firms' asset growth of all countries all over the world after the laws are implemented in each country.

I normally control for firm and industry * year fixed effects in this case following existing literature.

However, I reckon that the impacts of laws will be different between developed and developing countries. Therefore, I am thinking of using subsample tests. There are two ways of conducting a subsample test are 1) divide the whole sample into two subsamples and then run the main regression for all subsamples or (2) add the interaction for one subsample and see the difference by reading the interactive coefficients by running the regression.

1) divide the whole sample into two subsamples and then run the main regression for all subsamples or (2) add the interaction for one subsample and see the difference by reading the interactive coefficients by running the regression.

Regarding the method (2) in double diff, we call it diff-in-diff-in-diff or triple diff. And (2) is preferred compared to (1).

So, what I want to ask is, if in the main specification, I control for firm and industry * year fix effects, so what fixed effects I should control when I perform the triple diff to examine the additional impact of laws on developed countries?

I normally control for firm and industry * year fixed effects in this case following existing literature.

However, I reckon that the impacts of laws will be different between developed and developing countries. Therefore, I am thinking of using subsample tests. There are two ways of conducting a subsample test are

1) divide the whole sample into two subsamples and then run the main regression for all subsamples or (2) add the interaction for one subsample and see the difference by reading the interactive coefficients by running the regression.

1) divide the whole sample into two subsamples and then run the main regression for all subsamples or (2) add the interaction for one subsample and see the difference by reading the interactive coefficients by running the regression.Regarding the method (2) in double diff, we call it diff-in-diff-in-diff or triple diff. And (2) is preferred compared to (1).

So, what I want to ask is, if in the main specification, I control for firm and industry * year fix effects, so what fixed effects I should control when I perform the triple diff to examine the additional impact of laws on developed countries?

Square CSR: a brief overview

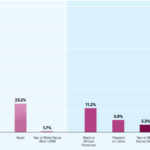

Square CSR programs and initiatives mainly focus on four key priority areas – Climate Action, Social Impact, Employees and Culture, and Corporate Governance. These initiatives are led by Neil Jorgensen, Global Environmental, Social, and Governance Lead at Square. Square Supporting Local Communities Square has invested USD 100 million in minority and underserved communities The financial unicorn assists some local communities in the US to preserve their local culture and deal with other most pressing issues. In many offices there are active volunteer communities, referred to volunteams that undertake various projects to support local communities Square and Gender Equality and Minorities Square invests in employee resource groups such as Black Squares Association, LatinX Community, LGBTQ group, and others in order to create an inclusive environment The majority 54,1% of US employees of The fintech are white people, as illustrated in Figure 1 below: Figure 1 Race and ethnicity of Square Inc. employees in US[1] Energy Consumption by Square Square launched Bitcoin Clean Energy Investment Initiative, USD 10 million investment to help accelerate renewable energy adoption in bitcoin mining. The payments company is planning to evaluate and explore clean energy options, working closely with its key provider partners. Carbon Emissions by Square In 2020 the total carbon footprint for Square totalled to 247,900 tCO2e. The Figure 2 below illustrates the share of carbon emissions by operations: Figure 2 Square Inc. total carbon emissions in 2020[2] The financial services and digital payments company has aimed to be zero carbon for operations by 2030. Other CSR Initiatives and Charitable Donations by Square Leads Program offered to managers at all levels is designed to develop leadership skills and competencies The finance sector disruptor fully pays parental leave globally, caregiving leave to US employees In UK Square partnered with The Entrepreneurial…

Square CSR programs and initiatives mainly focus on four key priority areas – Climate Action, Social Impact, Employees and Culture, and Corporate Governance. These initiatives are led by Neil Jorgensen, Global Environmental, Social, and Governance Lead at Square. Square Supporting Local Communities Square has invested USD 100 million in minority and underserved communities The financial unicorn assists some local communities in the US to preserve their local culture and deal with other most pressing issues. In many offices there are active volunteer communities, referred to volunteams that undertake various projects to support local communities Square and Gender Equality and Minorities Square invests in employee resource groups such as Black Squares Association, LatinX Community, LGBTQ group, and others in order to create an inclusive environment The majority 54,1% of US employees of The fintech are white people, as illustrated in Figure 1 below: Figure 1 Race and ethnicity of Square Inc. employees in US[1] Energy Consumption by Square Square launched Bitcoin Clean Energy Investment Initiative, USD 10 million investment to help accelerate renewable energy adoption in bitcoin mining. The payments company is planning to evaluate and explore clean energy options, working closely with its key provider partners. Carbon Emissions by Square In 2020 the total carbon footprint for Square totalled to 247,900 tCO2e. The Figure 2 below illustrates the share of carbon emissions by operations: Figure 2 Square Inc. total carbon emissions in 2020[2] The financial services and digital payments company has aimed to be zero carbon for operations by 2030. Other CSR Initiatives and Charitable Donations by Square Leads Program offered to managers at all levels is designed to develop leadership skills and competencies The finance sector disruptor fully pays parental leave globally, caregiving leave to US employees In UK Square partnered with The Entrepreneurial…Is there any package for stacked event-by-event estimates ?

I am reading a paper of Cengiz, 2019 about using stacked event-by-event estimates for the Difference-in-Differences settings. This is a good estimator for examining the effect of law without any control unit.I am wondering if Stata has any package for this estimator.

Many thanks and warm regards.

Many thanks and warm regards.

What is the difference between "stack" and "pca" ?

From my understanding, it seems that pca and stack syntaxes are all about suppressing two or more variables (similar characteristics) to one variable. Is there any difference between these two?

Trying to open shapefile but Stata looks for .dbf file

Hello,

I am trying to open a shapefile under the name "pga.shp" that I downloaded from the US Geological Survey. I am using shp2dta in Stata/MP 16.0.

This is the line of code I am using

When I run this, I get the following error:

I am puzzled as to why Stata is looking for a .dbf file when the one it should open is a .shp file. I do not have the .dbf files by the way. Just .shp

Any help would be appreciated.

Michelle Escobar

I am trying to open a shapefile under the name "pga.shp" that I downloaded from the US Geological Survey. I am using shp2dta in Stata/MP 16.0.

This is the line of code I am using

Code:

shp2dta using pga.shp, data(pga_data) coor(pga_coordinates) genid(id)

Code:

file pga.dbf not found r(601);

Any help would be appreciated.

Michelle Escobar

2SLS: Interpretation of Results

Hi - I am working with panel data (US industrials, 2000-2019) and am researching the impact of firm geographic diversification (GSD) on firm performance (ROA.) Fixed effects repression suggests an inverted U relationship between GSD and ROA. The linear and quadratic relationship of GSD performance are both significant. As a robustness check, I used 2SLS to correct for endogeneity of the regressor GSD (linear and quadratic.) The post estimation tests suggested that underidentification, weak identification annd over identification were not a problem and the regressor did in fact have an endogeneity issue. However, the results of 2SLS suggest non-significant relationship between GSD and performance. I am wondering how to interpret this result. In case you have any suggestions, please do let me know. Thank you.

Code:

. xtivreg2 Ln_EBIT_ROA Ln_Revenue Ln_LTD_to_Sales Ln_Intangible_Assets CoAge wGDPpc wCPI wDCF w

> Expgr wGDPgr wCons Ln_PS_RD (l1.Ln_GSD l1.Ln_GSD_Sqd= l1.Ln_Indgrp_GSD_by_Year Ln_Int_exp Ln_

> Int_exp_Sqd l1.Ln_ROS) if CoAge>=0 & NATION=="UNITED STATES" & NATIONCODE==840 & FSTS>=10 & G

> ENERALINDUSTRYCLASSIFICATION ==1 & Year_<2020 & Year_<YearInactive & Discr_GS_Rev!=1, fe endo

> g (l1.Ln_GSD)

Warning - singleton groups detected. 35 observation(s) not used.

FIXED EFFECTS ESTIMATION

------------------------

Number of groups = 141 Obs per group: min = 2

avg = 5.7

max = 17

IV (2SLS) estimation

--------------------

Estimates efficient for homoskedasticity only

Statistics consistent for homoskedasticity only

Number of obs = 798

F( 13, 644) = 4.41

Prob > F = 0.0000

Total (centered) SS = 203.9428465 Centered R2 = -0.0686

Total (uncentered) SS = 203.9428465 Uncentered R2 = -0.0686

Residual SS = 217.9269693 Root MSE = .5759

--------------------------------------------------------------------------------------

Ln_EBIT_ROA | Coef. Std. Err. z P>|z| [95% Conf. Interval]

---------------------+----------------------------------------------------------------

Ln_GSD |

L1. | -.7524867 .4739275 -1.59 0.112 -1.681367 .1763941

|

Ln_GSD_Sqd |

L1. | .108648 .1192194 0.91 0.362 -.1250177 .3423138

|

Ln_Revenue | .5130579 .1374392 3.73 0.000 .243682 .7824338

Ln_LTD_to_Sales | -.1517859 .0352142 -4.31 0.000 -.2208044 -.0827674

Ln_Intangible_Assets | -.0811832 .04624 -1.76 0.079 -.1718119 .0094456

CoAge | -.0249093 .0143349 -1.74 0.082 -.0530053 .0031866

wGDPpc | .0000639 .0000309 2.07 0.039 3.33e-06 .0001244

wCPI | -.0016804 .0280792 -0.06 0.952 -.0567146 .0533538

wDCF | -2.80e-15 1.67e-13 -0.02 0.987 -3.29e-13 3.24e-13

wExpgr | .0132025 .0114394 1.15 0.248 -.0092182 .0356232

wGDPgr | -.0291117 .0338202 -0.86 0.389 -.0953981 .0371747

wCons | 2.88e-14 6.23e-14 0.46 0.644 -9.32e-14 1.51e-13

Ln_PS_RD | -.0570622 .0723795 -0.79 0.430 -.1989234 .084799

--------------------------------------------------------------------------------------

Underidentification test (Anderson canon. corr. LM statistic): 45.779

Chi-sq(3) P-val = 0.0000

------------------------------------------------------------------------------

Weak identification test (Cragg-Donald Wald F statistic): 12.021

Stock-Yogo weak ID test critical values: 5% maximal IV relative bias 11.04

10% maximal IV relative bias 7.56

20% maximal IV relative bias 5.57

30% maximal IV relative bias 4.73

10% maximal IV size 16.87

15% maximal IV size 9.93

20% maximal IV size 7.54

25% maximal IV size 6.28

Source: Stock-Yogo (2005). Reproduced by permission.

------------------------------------------------------------------------------

Sargan statistic (overidentification test of all instruments): 2.186

Chi-sq(2) P-val = 0.3352

-endog- option:

Endogeneity test of endogenous regressors: 8.598

Chi-sq(1) P-val = 0.0034

Regressors tested: L.Ln_GSD

------------------------------------------------------------------------------

Instrumented: L.Ln_GSD L.Ln_GSD_Sqd

Included instruments: Ln_Revenue Ln_LTD_to_Sales Ln_Intangible_Assets CoAge

wGDPpc wCPI wDCF wExpgr wGDPgr wCons Ln_PS_RD

Excluded instruments: L.Ln_Indgrp_GSD_by_Year Ln_Int_exp Ln_Int_exp_Sqd

L.Ln_ROS

------------------------------------------------------------------------------How to list all unique values based on value of another variable?

Hi all, I am conducting a cross-country analysis.

I have a variable named developed has the value of 1 if this country is a developed country and 0 otherwise. Now I want to list all developed countries (variable's name is country) where developed=1, can I ask what I should do then?

Many thanks and warm regards.

I have a variable named developed has the value of 1 if this country is a developed country and 0 otherwise. Now I want to list all developed countries (variable's name is country) where developed=1, can I ask what I should do then?

Many thanks and warm regards.

Square Ecosystem: a brief overview

Square ecosystem represents a growing ecosystem of financial services. Ecosystem of Square can be divided into two groups: seller ecosystem and cash ecosystem. Square seller ecosystem is a cohesive commerce ecosystem that helps its sellers start, run, and grow their businesses. As illustrated in Figure 1 below, the core services for sellers are managed payments and points of sales products and services. Specific functionalities that contribute to these services include customer engagement platform, payroll invoices services, functionality to make appointments and capital management tools. Moreover, e-commerce and developer platforms, as well as, virtual terminal are important components of Square ecosystem on the Seller front. Figure 1 Square Seller products and services Square cash ecosystem, represented by Cash App comprises a wide range of financial products and services that are designed to help individuals manage their money. These include a cash card, instant discounts tool Boost. The strongest feature of Cash App that has no analogue in the market is the functionality to purchase Bitcoints and stocks in a simple, secure and hassle-free manner. Figure 2 Square Cash App functionalities The financial services and digital payments company is increasing the linkage between its seller and cash ecosystem in order to from strengthen its position in the market. For example, employers with seller account can pay their employees from seller revenues the next business day using Instant Payments feature when employees use Cash App. There is still room for growth of Square ecosystem on both fronts – Seller and Cash App. Apart from connecting the both ecosystems. The finance sector disruptor can also scale each of them, as well as, engage in optimised cross-selling. Moreover, the developer platform can play an instrumental role in expanding the ecosystem for Square, through exposing Application Program Interface (API) to third party developers to adjust it…

Square ecosystem represents a growing ecosystem of financial services. Ecosystem of Square can be divided into two groups: seller ecosystem and cash ecosystem. Square seller ecosystem is a cohesive commerce ecosystem that helps its sellers start, run, and grow their businesses. As illustrated in Figure 1 below, the core services for sellers are managed payments and points of sales products and services. Specific functionalities that contribute to these services include customer engagement platform, payroll invoices services, functionality to make appointments and capital management tools. Moreover, e-commerce and developer platforms, as well as, virtual terminal are important components of Square ecosystem on the Seller front. Figure 1 Square Seller products and services Square cash ecosystem, represented by Cash App comprises a wide range of financial products and services that are designed to help individuals manage their money. These include a cash card, instant discounts tool Boost. The strongest feature of Cash App that has no analogue in the market is the functionality to purchase Bitcoints and stocks in a simple, secure and hassle-free manner. Figure 2 Square Cash App functionalities The financial services and digital payments company is increasing the linkage between its seller and cash ecosystem in order to from strengthen its position in the market. For example, employers with seller account can pay their employees from seller revenues the next business day using Instant Payments feature when employees use Cash App. There is still room for growth of Square ecosystem on both fronts – Seller and Cash App. Apart from connecting the both ecosystems. The finance sector disruptor can also scale each of them, as well as, engage in optimised cross-selling. Moreover, the developer platform can play an instrumental role in expanding the ecosystem for Square, through exposing Application Program Interface (API) to third party developers to adjust it…Square McKinsey 7S Model

Square McKinsey 7S model is intended to illustrate how seven elements of business can be aligned to increase effectiveness. The framework divides business elements into two groups – hard and soft. Strategy, structure and systems are considered hard elements, whereas shared values, skills, style and staff are soft elements. According to Square McKinsey 7S model, there is a strong link between elements and a change in one element causes changes in others. Moreover, shared values are the most important of elements because they cause influence other elements to a great extent. McKinsey 7S model Hard Elements in Square McKinsey 7S Model Strategy Square business strategy is based on principles of simplifying financial transactions and developing an ecosystem of financial products and services. The financial services platform has two types of ecosystem – seller ecosystem and cash ecosystem. Square systematically expands both ecosystems with addition of new financial products and services that simplify processes and challenge the status quo. Structure Square organizational structure is highly dynamic, reflecting the rapid expansion of the range of financial products and services offered by the finance sector disruptor. Although it is difficult to frame Square organizational culture into any category due to the complexity of the business, it is closer to flat organizational structure compared to known alternatives. Specifically, co-founder and CEO Jack Dorsey has very little tolerance for bureaucracy and formality in business processes. Moreover, Dorsey believes in providing independence to teams and maintain the team sizes small. All of these are reflected in Square organizational structure. Systems Square Inc. business operations depend on a wide range of systems. These include employee recruitment and selection system, team development and orientation system, transaction processing systems and others. Moreover, customer relationship management system, business intelligence system and knowledge management system is also important…

Square McKinsey 7S model is intended to illustrate how seven elements of business can be aligned to increase effectiveness. The framework divides business elements into two groups – hard and soft. Strategy, structure and systems are considered hard elements, whereas shared values, skills, style and staff are soft elements. According to Square McKinsey 7S model, there is a strong link between elements and a change in one element causes changes in others. Moreover, shared values are the most important of elements because they cause influence other elements to a great extent. McKinsey 7S model Hard Elements in Square McKinsey 7S Model Strategy Square business strategy is based on principles of simplifying financial transactions and developing an ecosystem of financial products and services. The financial services platform has two types of ecosystem – seller ecosystem and cash ecosystem. Square systematically expands both ecosystems with addition of new financial products and services that simplify processes and challenge the status quo. Structure Square organizational structure is highly dynamic, reflecting the rapid expansion of the range of financial products and services offered by the finance sector disruptor. Although it is difficult to frame Square organizational culture into any category due to the complexity of the business, it is closer to flat organizational structure compared to known alternatives. Specifically, co-founder and CEO Jack Dorsey has very little tolerance for bureaucracy and formality in business processes. Moreover, Dorsey believes in providing independence to teams and maintain the team sizes small. All of these are reflected in Square organizational structure. Systems Square Inc. business operations depend on a wide range of systems. These include employee recruitment and selection system, team development and orientation system, transaction processing systems and others. Moreover, customer relationship management system, business intelligence system and knowledge management system is also important…Square Value Chain Analysis

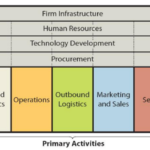

Value chain analysis is a strategic analytical tool that can be used to identify business activities that create the most value. The framework divides business activities into two categories – primary and support. The figure below illustrates the essence of Square value chain analysis. Square Value Chain Analysis Square Primary Activities Square Inbound logistics Inbound logistics refers to receiving and storing raw materials for their consequent usage in producing products and providing services. Along with a wide range of financial services, Square offers related hardware products such as card readers, stands, terminals, registers, hardware kits and accessories. The majority of hardware products are produced in China. Accordingly, cost-effectiveness of producing hardware products is the main source of value creation in inbound logistics for Square. Square Operations Square operations are divided into the following two segments: 1. Seller ecosystem. An expanding ecosystem of products and services that are designed to assist sellers to start, run and grow their businesses. In 2019 this segment processed USD106.2 billion of Gross Payment Volume (GPV), which was generated by nearly 2.3 billion card payments from 407 million payment cards.[1] 2. Cash ecosystem. This segment centred on Cash App integrates financial products and services that help individuals manage their money. As of December 2019, Cash App had approximately 24 million monthly active customers who had at least one transaction in any given month.[2] The main source of value creation in operations primary activity for Square refers to high level of speed and convenience of products and services enabled by technology. The finance sector disruptor spotted demand in both segments and developed products and services to satisfy demand with the use of the latest technology. Square Outbound Logistics Outbound logistics involve warehousing and distribution of products. Square uses the following distribution channels to distribute…

Value chain analysis is a strategic analytical tool that can be used to identify business activities that create the most value. The framework divides business activities into two categories – primary and support. The figure below illustrates the essence of Square value chain analysis. Square Value Chain Analysis Square Primary Activities Square Inbound logistics Inbound logistics refers to receiving and storing raw materials for their consequent usage in producing products and providing services. Along with a wide range of financial services, Square offers related hardware products such as card readers, stands, terminals, registers, hardware kits and accessories. The majority of hardware products are produced in China. Accordingly, cost-effectiveness of producing hardware products is the main source of value creation in inbound logistics for Square. Square Operations Square operations are divided into the following two segments: 1. Seller ecosystem. An expanding ecosystem of products and services that are designed to assist sellers to start, run and grow their businesses. In 2019 this segment processed USD106.2 billion of Gross Payment Volume (GPV), which was generated by nearly 2.3 billion card payments from 407 million payment cards.[1] 2. Cash ecosystem. This segment centred on Cash App integrates financial products and services that help individuals manage their money. As of December 2019, Cash App had approximately 24 million monthly active customers who had at least one transaction in any given month.[2] The main source of value creation in operations primary activity for Square refers to high level of speed and convenience of products and services enabled by technology. The finance sector disruptor spotted demand in both segments and developed products and services to satisfy demand with the use of the latest technology. Square Outbound Logistics Outbound logistics involve warehousing and distribution of products. Square uses the following distribution channels to distribute…What is the ideal way to keep only needed variables in panal dataset?

I have around 40 variables in my dataset but I actually only use around 8 of them. I assume that keeping 8 variables is better than keeping all these variables in my dataset, which may cost Stata more time to deal with the data. I am thinking of a good way to deal with that. Can I ask what you should do in this case? Do you create a new panel dataset and run the regression from that or else?

If we just keep some variables in the dataset, whether we can follow this post or is there any simpler way to do so?

Many thanks and warmest regards.

Stata 17, Windows 10.

If we just keep some variables in the dataset, whether we can follow this post or is there any simpler way to do so?

Many thanks and warmest regards.

Stata 17, Windows 10.

How to delete observation if one of these variables missing?

A common practice to reduce the size of the panel sample (to reduce the time that Stata process the data) is to delete the observation where one of the variables gets a missing value (Stata will ignore this observation anyway).

So, let's say I have a dataset of 40 variables, but I want to delete the observation where any of these variables contain missing: x1, x2, x5, x6

Can you tell me how to do that?

So, let's say I have a dataset of 40 variables, but I want to delete the observation where any of these variables contain missing: x1, x2, x5, x6

Can you tell me how to do that?

Confirmatory factor analysis

Hi! Just wonder in the computation of confirmatory factor analysis, what input is putting in the formula in STATA, correlation matrix or covariance matrix? I understand the outcome analysis is based on covariance matrix.

Regression with multiple dependent variables

Hi,

I am trying to run a set of logit models using the following formula, but it is not working. Can you please suggest what is not correct about this:

.

I am trying to run a set of logit models using the following formula, but it is not working. Can you please suggest what is not correct about this:

Code:

foreach x in diabetes asthma cancer {

logit `x' Age Sex, or

}

is not a valid command name

r(199);Comparing two string variables and extract difference into a new variable

Hi,

I am trying to tackle the problem of comparing text between two string variables and identify (and extract) “updated” parts.

I found some VBA script for Excel but only works for two cells (not automated to check two columns via loops). I don’t know how to modify VBA scripts. There is a STATA command for sequence analysis (based on Needleman-Wunsch) but I cannot figure out how it applies to comparing sentences. Anyone knows any other program or how the sequence analysis works for comparing sentences?

Thanks!

Xiaodong

I am trying to tackle the problem of comparing text between two string variables and identify (and extract) “updated” parts.

| String Var1 | String Var2 | Result new variable |

| “I wrote this in 2020” | “I wrote this in 2020. I updated this in 2021” | I updated this in 2021 |

| “someone said this” | “In 2020, someone said this” | In 2020, |

| “numbers reported in 2020” | “numbers changed in 2021” | changed 2021 |

Thanks!

Xiaodong

Looping to create marginsplots for different moderators but how to get the same y-axis?

Hi everybody

I am researching how outsourcing affects employees in terms of income, employment, and benefits and, next, how these effects are moderated by gender and education.

Now, I have made all my models and want to visualize them using margins. For the sake of brevity, I have used a loop:

However, I want to use the same y-axis for the analyses of the different outcomes so that, e.g., income has the same y-axis through the main analysis, gender analysis, and education analysis (e.g. 40.000, 42.500, 45.000, 47.500, 50.000).

Is there a way to do this in the regression loop or afterwards? What I do not want is to stop using the loop and do everything manually. I hope somebody has a suggestion.

Best,

Gustav

I am researching how outsourcing affects employees in terms of income, employment, and benefits and, next, how these effects are moderated by gender and education.

Now, I have made all my models and want to visualize them using margins. For the sake of brevity, I have used a loop:

Code:

*gender

foreach var of varlist income employment benefits {

xtreg `var' i.treatment##i.time##i.gender i.education covariates, fe cluster(id)

margins treatment, at(time=(1999 2000 2001 2002) gender = (0))

marginsplot, name(male_`var', replace) different options

margins treatment, at(time=(1999 2000 2001 2002) gender = (1))

marginsplot, name(female_`var', replace) different options

}

foreach var of varlist income employment benefits {

grc1leg male_`var' female_`var', ycommon name(gender_`var')

}

foreach var of varlist income employment benefits {

graph display gender_`var'

graph export gender_`var'.pdf, replace

}

**********************************************************

*education

foreach var of varlist income employment benefits {

xtreg `var' i.treatment##i.time##i.education i.gender covariates, fe cluster(id)

margins treatment, at(time=(1999 2000 2001 2002) education = (0))

marginsplot, name(edu0_`var', replace) different options

margins treatment, at(time=(1999 2000 2001 2002) education = (1))

marginsplot, name(edu1_`var', replace) different options

}

foreach var of varlist income employment benefits {

grc1leg edu0_`var' edu1_`var', ycommon name(edu_`var')

}

foreach var of varlist income employment benefits {

graph display edu_`var'

graph export edu_`var'.pdf, replace

}Is there a way to do this in the regression loop or afterwards? What I do not want is to stop using the loop and do everything manually. I hope somebody has a suggestion.

Best,

Gustav

Reference on manipulation of locals/macros/lists

I would like to learn more how to work with locals to store objects and then add, remove things, manipulate them according to criteria. I looked at the macro manipulation entry, but it's doesn't seem helpful. This was very helpful. https://acarril.github.io/posts/macro-lists and but it only discusses a couple of manipulations. Are there more comprehensive explainers?

cmmixlogit does not converge

Hi,

I am facing slight issues with the cmmixlogit command, which worries me a bit.

I am working with a discrete choice experiment, in which a recruiter (decision maker) chooses between two profiles. In total, one recruiter makes seven recruiting decisions, which allows to vary the attributes in the choice sets.I calculate individual clustered standard error due to the up to seven recruitment decisions per recruiter.

Previous to the cmmixlogit command, I used the mixlogit as follows below. It worked just fine.

mixlogit choice Int_x11_Var Int_x21_Var Int_x31_Var Int_x41_Var Int_x51_Var Int_x61_Var if ws == 1, rand(x11 x21 x31 x41 x51 x61) group(ID) id(LFD) nrep(300) cluster(LFD)

Now, I would like to use the cmmixlogit command, but it does not converge (message: not concave). I tried the following:

cmset LFD ID vig_alt

cmmixlogit choice Int_x11_Var Int_x21_Var Int_x31_Var Int_x41_Var Int_x51_Var Int_x61_Var if ws == 1, rand(x11 x21 x31 x41 x51 x61) vce(cluster LFD) noconstant

I appreciate any kind of help! In case you need more information or my explanation is not clear, please let me know.

Thanks in advance!

Best,

Luisa

I am facing slight issues with the cmmixlogit command, which worries me a bit.

I am working with a discrete choice experiment, in which a recruiter (decision maker) chooses between two profiles. In total, one recruiter makes seven recruiting decisions, which allows to vary the attributes in the choice sets.I calculate individual clustered standard error due to the up to seven recruitment decisions per recruiter.

Previous to the cmmixlogit command, I used the mixlogit as follows below. It worked just fine.

mixlogit choice Int_x11_Var Int_x21_Var Int_x31_Var Int_x41_Var Int_x51_Var Int_x61_Var if ws == 1, rand(x11 x21 x31 x41 x51 x61) group(ID) id(LFD) nrep(300) cluster(LFD)

Now, I would like to use the cmmixlogit command, but it does not converge (message: not concave). I tried the following:

cmset LFD ID vig_alt

cmmixlogit choice Int_x11_Var Int_x21_Var Int_x31_Var Int_x41_Var Int_x51_Var Int_x61_Var if ws == 1, rand(x11 x21 x31 x41 x51 x61) vce(cluster LFD) noconstant

I appreciate any kind of help! In case you need more information or my explanation is not clear, please let me know.

Thanks in advance!

Best,

Luisa

Surveys and lists.

Hi there, Can you please help me with how can I do this in STATA?

My data set:

Several waves of data from 60 countries. Around 3000 respondents from each country answered a question.

Four answer choices (1,2,3& 4)– respondents picked one.

For the analysis, I need to find the country-level percentage of respondents’ answers for each choice.

Many thanks!

My data set:

Several waves of data from 60 countries. Around 3000 respondents from each country answered a question.

Four answer choices (1,2,3& 4)– respondents picked one.

| Year | List of countries | Respondent ID | Answer |

| 1990/95 | Country1 Country 2 Country 60 |

||

| 1996/2000 | Country1 Country 2 Country 60 |

||

| 2000/2005 | Country1 Country 2 Country 60 |

||

| 2006/2010 | Country1 Country 2 Country 60 |

||

| 2011/2015 | Country1 Country 2 Country 60 |

| 2011/2015 | Country1 | % people answered 1 % people answered 2 % people answered 3 % people answered 4 |

problem in dummy approach-interaction terms

Dear

I use the panel regressions using augmented mean group (AMG) method, I add the dummy variables and interact it with my independent variables but the analysis give me insignificant results in all my dependent variables with the interaction terms ?? can you explain it for me why the results insignificant in statistical way???

I use the panel regressions using augmented mean group (AMG) method, I add the dummy variables and interact it with my independent variables but the analysis give me insignificant results in all my dependent variables with the interaction terms ?? can you explain it for me why the results insignificant in statistical way???

PHP Code:

xtmg dTotalRevenueProptionGDP indep1 indep2 indep3 inter_indep1 inter_indep2 inter_indep3 ldlnrer ldlnGDP , aug robust trend

Augmented Mean Group estimator (Bond & Eberhardt, 2009; Eberhardt & Teal, 2010)

Common dynamic process included as additional regressor

All coefficients represent averages across groups (group variable: CountryID)

Coefficient averages computed as outlier-robust means (using rreg)

Mean Group type estimation Number of obs = 792

AMG Wald chi2(8) = 35.05

Prob > chi2 = 0.0000

------------------------------------------------------------------------------

dTotalReve~P | Coefficient Std. err. z P>|z| [95% conf. interval]

-------------+----------------------------------------------------------------

indep1 | -.0028017 .0122874 -0.23 0.820 -.0268846 .0212812

indep2 | .0166705 .005728 2.91 0.004 .0054439 .0278971

indep3 | .056705 .0115252 4.92 0.000 .0341161 .0792939

inter_indep1 | -.0099015 .0279472 -0.35 0.723 -.064677 .0448739

inter_indep2 | .0102204 .0131027 0.78 0.435 -.0154604 .0359011

inter_indep3 | .0003112 .0104792 0.03 0.976 -.0202277 .02085

ldlnrer | .0183941 .0172272 1.07 0.286 -.0153705 .0521588

ldlnGDP | .0097457 .0145741 0.67 0.504 -.0188189 .0383104

c_d_p | .8662274 .1733312 5.00 0.000 .5265045 1.20595

trend | 1.81e-06 .0001344 0.01 0.989 -.0002615 .0002652

_cons | -.0101629 .0053661 -1.89 0.058 -.0206804 .0003545

------------------------------------------------------------------------------

Root Mean Squared Error (sigma): 0.0320

(RMSE uses residuals from group-specific regressions: unaffected by 'robust').

Variable c_d_p refers to the common dynamic process.

Variable trend refers to the group-specific linear trend terms.

Share of group-specific trends significant at 5% level: 0.000 (= 0 trends)

.

Select all variables based on suffix

I want to select all variables that contain a certain suffix to store them in a macro. Any suggestions?

MWE:

MWE:

Code:

sysuse auto.dta

ds make, not

foreach var of varlist `r(varlist)' {

gen `var'_minus_one = `var' - 1

}

* This does not work

global vars *_minus_oneTuesday, September 28, 2021

Using World Values Survey Wave 7 in Stata

Hello, I am getting started with using World Values Survey Wave 7 (WVS-7) in Stata. Is there anyone who has used WVS-7 in Stata ? I am interested in knowing how to set up Stata using *svyset* for multilevel modelling of WVS-7, with individuals at level 1 and countries at level 2.

Creating a variable with several condition using the foreach loop

Hello Everyone,

I am trying to create a variable ,"grad_AA", that will determine respondents' graduation year using the variable D_AA, which records respondents' graduation dates in month. The value of this variable is between 235 and 456 months. The year of graduation is determine using a interval. For example when D_AA is between [235; 240] the correspondent graduation year is 1999.

I have tried this following loop but it is giving me errors.

Can anyone help me figure out what is wrong?

foreach i of D_AA 235/456{

gen grad_AA `i'=1999 if `i'>=235 & <=240

replace grad_AA `i' = 2000 if `x' >=241 & <=252

replace grad_AA`i'=2001 if `i' >=253 & G4<=264

replace grad_AA`i'=2002 if `i' >=264 & <=276

replace grad_AA`i' =2003 if `i' >=277 & <=288

replace grad_AA`i' =2004 if `i' >=289 & <=300

replace grad_AA`i'=2005 if `i' >=301 & <=312

replace grad_AA`i' =2006 if `i' >=313 & <=324

replace grad_AA`i'=2007 if `i' >=325 & <=336

replace grad_AA`i' =2008 if `i' >=337 & <=348

replace grad_AA`i' = 2009 if `i' >=349 & <=360

replace grad_AA`i' =2010 if `i' >=361 & <=372

replace grad_AA `i' =2011 if `i' >=372 & <=384

replace grad_AA`i' =2012 if `i' >=385 & <=396

replace grad_AA`i' =2013 if `i' >=397 & <=408

replace grad_AA`i' =2014 if `i' >=409 & <=420

replace grad_AA`i' =2015 if `i' >=421 & <=432

replace grad_AA `i' =2016 if `i' >=433 & <=444

replace grad_AA`i' =2017 if `i' >=445 & <=456)

replace grad_AA`i' =2018 if `i' >=457 & <=468)

replace Grad_AA `i'=. if `i'=.

}

Thank you so much for your help.

I am trying to create a variable ,"grad_AA", that will determine respondents' graduation year using the variable D_AA, which records respondents' graduation dates in month. The value of this variable is between 235 and 456 months. The year of graduation is determine using a interval. For example when D_AA is between [235; 240] the correspondent graduation year is 1999.

I have tried this following loop but it is giving me errors.

Can anyone help me figure out what is wrong?

foreach i of D_AA 235/456{

gen grad_AA `i'=1999 if `i'>=235 & <=240

replace grad_AA `i' = 2000 if `x' >=241 & <=252

replace grad_AA`i'=2001 if `i' >=253 & G4<=264

replace grad_AA`i'=2002 if `i' >=264 & <=276

replace grad_AA`i' =2003 if `i' >=277 & <=288

replace grad_AA`i' =2004 if `i' >=289 & <=300

replace grad_AA`i'=2005 if `i' >=301 & <=312

replace grad_AA`i' =2006 if `i' >=313 & <=324

replace grad_AA`i'=2007 if `i' >=325 & <=336

replace grad_AA`i' =2008 if `i' >=337 & <=348

replace grad_AA`i' = 2009 if `i' >=349 & <=360

replace grad_AA`i' =2010 if `i' >=361 & <=372

replace grad_AA `i' =2011 if `i' >=372 & <=384

replace grad_AA`i' =2012 if `i' >=385 & <=396

replace grad_AA`i' =2013 if `i' >=397 & <=408

replace grad_AA`i' =2014 if `i' >=409 & <=420

replace grad_AA`i' =2015 if `i' >=421 & <=432

replace grad_AA `i' =2016 if `i' >=433 & <=444

replace grad_AA`i' =2017 if `i' >=445 & <=456)

replace grad_AA`i' =2018 if `i' >=457 & <=468)

replace Grad_AA `i'=. if `i'=.

}

Thank you so much for your help.

error using svy bootstrap with self-written program

Dear list,

I want to calculate bootstrapped standard error (and pvalue) of the difference between two correlation coefficients.

In my context, the two correlation coefficients are calculated on two separate samples. In other words, I am essentially comparing correlations between groups.

I plan to use rhsbsample to generate bootstrap replicate weights because I need to incorporate sampling weight in analysis.

Below is my code. Unfortunately, it does not work well. In the end, it alerts me that "last estimates not found". Can someone offer help? Thank you!

rhsbsample is written by Van Kerm's (2013) (available from the SSC archive).

Van Kerm, P. 2013. rhsbsample: Stata module for repeated half-sample bootstrap sampling. Statistical Software Components S457697, Department of Economics, Boston College. https://ideas.repec.org/c/boc/bocode/s457697.html.

Part of my code come from Jenkins, Stephen P. 2020. “Comparing Distributions of Ordinal Data.” The Stata Journal 20(3):505–31. doi: 10.1177/1536867X20953565, and a prior thread on Statalist.

I want to calculate bootstrapped standard error (and pvalue) of the difference between two correlation coefficients.

In my context, the two correlation coefficients are calculated on two separate samples. In other words, I am essentially comparing correlations between groups.

I plan to use rhsbsample to generate bootstrap replicate weights because I need to incorporate sampling weight in analysis.

Below is my code. Unfortunately, it does not work well. In the end, it alerts me that "last estimates not found". Can someone offer help? Thank you!

rhsbsample is written by Van Kerm's (2013) (available from the SSC archive).

Van Kerm, P. 2013. rhsbsample: Stata module for repeated half-sample bootstrap sampling. Statistical Software Components S457697, Department of Economics, Boston College. https://ideas.repec.org/c/boc/bocode/s457697.html.

Part of my code come from Jenkins, Stephen P. 2020. “Comparing Distributions of Ordinal Data.” The Stata Journal 20(3):505–31. doi: 10.1177/1536867X20953565, and a prior thread on Statalist.

Code:

webuse nlsw88,clear

rename ttl_exp pweight //create artificial sampling weight variable

capture prog drop corrdiff

program define corrdiff, rclass

corr wage hours if south==0

local def1=`r(rho)'

corr wage hours if south==1

local def2=`r(rho)'

return scalar diff = `def2' - `def1'

end

local R = 100

forvalues i = 1/`R' {

qui gen rhsbrw`i' = .

qui rhsbsample, weight(rhsbrw`i') strata(south)

qui replace rhsbrw`i' = rhsbrw`i' * pweight

}

svyset [pw = pweight], vce(bootstrap) bsrweight(rhsbrw*) mse

svy bootstrap diff = (r(diff)), dots: corrdiffSensitivity analysis following mediation with sureg

Greetings,

I'm running Stata 15.1 on a Mac OS and working with experimental data. I've been conducting tests of mediation with the 'sureg' command (why sureg? because I have a series of pre-treatment covariates, some of which are categorical, and sureg allows the use of prefix 'i' operators) to determine whether or to what extent the effects of an experimental treatment (a dummy variable) on a continuous outcome variable are conveyed via a 3rd continuous post-treatment variable. I'd like to run a sensitivity analysis to test how robust the indirect effects are to violations of the 'no unmeasured confounding of the M-Y relationship' assumption. Unfortunately, I'm not sure if this is possible after a 'sureg'. Does anyone know if it is or how I can go about it? If not possible with sureg, what are my options as far as sensitivity analysis goes?

If it helps, here is the code/program I've been using to run the sureg models + calculate bootstrap standard errors:

Here is also some sample data:

Thanks in advance for any help you can provide!

I'm running Stata 15.1 on a Mac OS and working with experimental data. I've been conducting tests of mediation with the 'sureg' command (why sureg? because I have a series of pre-treatment covariates, some of which are categorical, and sureg allows the use of prefix 'i' operators) to determine whether or to what extent the effects of an experimental treatment (a dummy variable) on a continuous outcome variable are conveyed via a 3rd continuous post-treatment variable. I'd like to run a sensitivity analysis to test how robust the indirect effects are to violations of the 'no unmeasured confounding of the M-Y relationship' assumption. Unfortunately, I'm not sure if this is possible after a 'sureg'. Does anyone know if it is or how I can go about it? If not possible with sureg, what are my options as far as sensitivity analysis goes?

If it helps, here is the code/program I've been using to run the sureg models + calculate bootstrap standard errors:

Code:

capture program drop bootbm program bootbm, rclass syntax [if] [in] sureg (mediatior treatment i.ideo7 i.party7 male age i.educ i.region4) (outcome treatment mediator i.ideo7 i.party7 male age i.educ i.region4) `if' `in' return scalar indirecteffect = [mediator]_b[treatment]*[outcome]_b[mediator] return scalar totaleffect= [outcome]_b[treatment]+[mediator]_b[treatment]*[outcome]_b[mediator] return scalar directeffect=[outcome]_b[treatment] end bootstrap r(indirecteffect) r(totaleffect) r(directeffect), reps(10000): bootbm

Code:

* Example generated by -dataex-. To install: ssc install dataex clear input float(outcome mediator) double treatment float age long ideo7 float party7 long(educ male region4) 1.0860398 .57275814 1 73 2 1 4 1 2 .4070499 .23108044 0 38 6 6 3 0 2 1.5952822 1.2561136 0 37 1 1 3 0 3 1.0860398 1.2561136 0 33 1 1 3 0 1 -1.1206771 -1.1356306 0 57 4 5 4 0 4 1.4255346 .914436 0 64 1 1 4 0 3 -1.2904246 .23108044 1 46 5 6 3 0 3 1.5952822 .06024148 0 78 2 1 4 1 3 1.4255346 .57275814 1 31 1 2 3 0 2 -1.460172 .4019194 1 31 3 2 1 0 4 -.6114347 -1.818986 0 40 2 2 3 1 1 -.27193987 .57275814 1 49 2 1 2 1 2 1.4255346 1.2561136 0 29 1 1 4 0 1 -1.460172 -.452275 0 25 1 1 3 1 4 .746545 .57275814 1 41 3 1 4 1 4 .2373026 .23108044 1 37 1 1 3 1 3 1.2557874 1.2561136 1 42 2 1 3 1 3 .4070499 .06024148 1 31 1 1 1 1 2 1.2557874 .914436 0 32 1 1 3 0 4 1.0860398 1.2561136 0 69 2 1 3 0 3 1.2557874 1.2561136 0 26 1 1 3 1 3 1.5952822 1.2561136 0 51 1 1 3 0 3 -1.1206771 -1.1356306 1 68 6 6 2 0 2 .5767974 .06024148 0 33 1 2 2 0 2 1.5952822 1.2561136 1 27 1 1 2 0 4 -.10219235 -.452275 1 38 2 1 2 0 3 .2373026 .57275814 0 50 2 1 4 0 3 .746545 1.2561136 1 70 3 3 1 0 3 .4070499 .7435971 0 35 2 3 3 1 1 -1.460172 -1.4773084 0 38 7 7 1 0 3 1.4255346 1.2561136 0 36 1 3 2 1 4 1.4255346 1.2561136 1 67 2 1 4 1 2 -.9509296 -1.1356306 0 47 6 6 3 1 4 1.0860398 1.2561136 1 45 2 1 2 0 2 -.27193987 -.11059724 1 53 6 7 2 0 4 1.2557874 1.2561136 0 37 2 2 1 1 3 .5767974 1.0852747 1 28 3 1 3 0 2 1.2557874 -.11059724 1 27 2 1 2 1 4 -1.2904246 -.11059724 0 64 1 1 3 1 1 -1.460172 -1.3064694 1 34 5 6 2 0 4 .06755506 .57275814 1 54 2 1 3 0 3 .06755506 .914436 0 44 3 3 2 1 4 -.4416873 .23108044 0 28 5 6 3 0 2 -.9509296 -.2814362 0 39 6 7 3 1 4 1.0860398 1.0852747 0 26 1 1 1 0 2 .5767974 .7435971 0 28 2 2 2 1 1 1.0860398 1.0852747 0 25 1 1 3 0 3 -.27193987 .23108044 0 42 1 1 2 1 3 1.0860398 1.2561136 0 33 2 2 4 0 2 .5767974 .23108044 1 56 3 3 4 1 1 -.4416873 -.9647917 0 22 3 1 3 0 1 .06755506 .7435971 0 49 2 3 2 1 4 1.2557874 1.0852747 1 23 1 1 2 0 3 .9162923 .914436 1 22 5 6 3 1 2 1.0860398 .7435971 1 36 1 1 3 1 2 -1.460172 -1.818986 1 30 6 7 3 0 1 -.9509296 -.7939528 0 23 2 2 4 1 1 .746545 1.2561136 1 60 3 1 1 0 2 1.4255346 1.0852747 0 41 2 2 2 1 3 -.6114347 -.7939528 1 36 3 1 2 0 3 1.4255346 .57275814 1 39 1 1 3 1 1 -.4416873 -.11059724 1 30 3 4 2 0 4 .5767974 .914436 0 26 3 2 4 1 1 -1.460172 -.7939528 1 60 3 4 3 1 3 1.2557874 .7435971 1 33 1 1 3 1 1 .5767974 1.2561136 0 24 1 3 1 1 2 .5767974 .06024148 1 57 2 1 4 0 2 .9162923 .914436 1 38 3 2 4 1 1 .9162923 .7435971 0 31 4 3 4 0 4 1.5952822 1.2561136 1 61 2 1 2 0 3 -1.460172 -1.818986 1 40 6 7 4 1 2 -1.460172 -.9647917 1 28 6 6 2 0 2 -.10219235 -1.3064694 1 39 2 2 4 0 3 .9162923 .4019194 1 69 1 1 2 0 3 -.4416873 .57275814 1 46 3 2 2 0 3 .9162923 1.2561136 0 33 1 1 3 0 1 .06755506 .23108044 0 35 4 2 4 1 3 -.7811822 .06024148 1 49 3 2 3 0 4 -.4416873 1.2561136 0 36 2 3 3 1 3 .06755506 .57275814 1 34 2 1 3 0 1 -.4416873 -.7939528 1 42 5 6 3 1 4 -.27193987 1.0852747 0 48 3 6 3 0 3 -.10219235 1.2561136 0 24 1 1 4 0 3 -.4416873 -.2814362 1 52 6 5 4 1 3 .746545 1.0852747 1 32 1 1 4 0 2 1.4255346 1.2561136 1 36 1 3 2 0 4 1.5952822 .7435971 0 33 2 2 2 1 4 -1.460172 -1.818986 0 40 7 7 3 0 2 -.27193987 .57275814 0 38 3 2 3 1 3 .5767974 1.2561136 1 24 3 2 2 0 3 .9162923 -.2814362 1 46 2 1 4 0 3 1.5952822 1.2561136 1 40 1 3 4 1 2 -.10219235 -.2814362 1 25 2 1 3 0 2 .2373026 -.452275 0 23 4 5 1 0 2 .5767974 .57275814 0 33 1 3 4 0 3 -.10219235 .23108044 0 29 1 1 3 1 3 1.0860398 .57275814 1 40 2 2 4 1 4 1.5952822 1.2561136 0 26 1 1 3 1 2 .06755506 .57275814 0 24 2 1 3 0 4 -.9509296 -.452275 0 35 3 2 2 0 3 end label values ideo7 ideo7 label def ideo7 1 "Conservative", modify label def ideo7 2 "Liberal", modify label def ideo7 3 "Moderate/Middle of the road", modify label def ideo7 4 "Slightly conservative", modify label def ideo7 5 "Slightly liberal", modify label def ideo7 6 "Very conservative", modify label def ideo7 7 "Very liberal", modify label values educ educ label def educ 1 "4 year/Bachelor’s degree", modify label def educ 2 "Doctoral or Professional degree", modify label def educ 3 "High school graduate", modify label def educ 4 "Less than high school graduate", modify

How to replace the value of a variable by the most frequently occured value

Hi,

I have a dataset looks like this:

I want to replace the value of the location by the most frequently happened one. For instance, for id 1, "A" appears 4 times, so I want to replace all the locations for id 1 to "A", and "E" to id 2.

I wonder if anyone knows how to realize this in stata.

Thank you !

I have a dataset looks like this:

Code:

* Example generated by -dataex-. To install: ssc install dataex clear input str2 id str8 location "1" "A" "1" "A" "1" "A" "1" "A" "1" "B" "1" "B" "1" "C" "2" "E" "2" "E" "2" "C" end

I wonder if anyone knows how to realize this in stata.

Thank you !

Find number of children, age at birth for women using identifiers

Hi:

I am working with a dataset that looks something like this:

HHID PID MID Rel_Head Age

10 1 3 1 56

10 2 . 2 48

10 3 . 7 75

10 4 . 8 80

10 5 2 6 18

10 6 2 6 16

10 7 3 5 52

10 8 3 5 49

12 1 . 1 25

12 2 . 2 24

where HHID is household identifier; PID is member identifier within each household; MID is the identifier for the mother; Rel_Head is the relationship to the head of the household (it's 1 if individual is head; 2 if they are spouse of head; 6 if child of head; 8 if father of head and so on).

For instance, in the above dataset, PID-5 and 6 are children of 1 and 2 in household 10.

I want to construct two variables from this dataset:

1. The number of children for each mother in the household;

2. The age at first birth for each mother. In other words, difference between her age and her oldest offspring's age.

Basically, I want to have two variables corresponding to the last two columns below:

HHID PID MID Rel_Head Age N_children Age_f_birth

10 1 3 1 56

10 2 . 2 48 2 30

10 3 . 7 75 2 19

10 4 . 8 80

10 5 2 6 18

10 6 2 6 16

10 7 3 5 52

10 8 3 5 49

12 1 . 1 25

12 2 . 2 24

Any help would be immensely appreciated! Thank you!

I am working with a dataset that looks something like this:

HHID PID MID Rel_Head Age

10 1 3 1 56

10 2 . 2 48

10 3 . 7 75

10 4 . 8 80

10 5 2 6 18

10 6 2 6 16

10 7 3 5 52

10 8 3 5 49

12 1 . 1 25

12 2 . 2 24

where HHID is household identifier; PID is member identifier within each household; MID is the identifier for the mother; Rel_Head is the relationship to the head of the household (it's 1 if individual is head; 2 if they are spouse of head; 6 if child of head; 8 if father of head and so on).

For instance, in the above dataset, PID-5 and 6 are children of 1 and 2 in household 10.

I want to construct two variables from this dataset:

1. The number of children for each mother in the household;

2. The age at first birth for each mother. In other words, difference between her age and her oldest offspring's age.

Basically, I want to have two variables corresponding to the last two columns below:

HHID PID MID Rel_Head Age N_children Age_f_birth

10 1 3 1 56

10 2 . 2 48 2 30

10 3 . 7 75 2 19

10 4 . 8 80

10 5 2 6 18

10 6 2 6 16

10 7 3 5 52

10 8 3 5 49

12 1 . 1 25

12 2 . 2 24

Any help would be immensely appreciated! Thank you!

Square Porter’s Five Forces Analysis

Porter’s Five Forces analytical framework developed by Michael Porter (1979)[1] represents five individual forces that shape an overall extent of competition in the industry. Square Porters Five Forces are illustrated in figure 1 below: Figure 1 Porter’s Five Forces Threat of new entrants in Square Porter’s Five Forces Analysis Threat of new entrants into the fintech sector is considerable. The following points affect the formation of the threat of new entrants into Square’s industry. 1. Time of entry. Banking and finance is already being disrupted globally and Square has been at the forefront of changes. Nowadays it has become evident that the old ways of conducting financial affairs where businesses had to wait for days, if not weeks to get their loan applications reviewed and employees have to wait for several days to get their cheques cashed will become history soon. Accordingly, some entrepreneurs, as well as, many established financial institutions are currently realizing their own projects to claim their piece of pie in the formation of the new global financial landscape. 2. Expected retaliation from current market players. While there are many entities interested in participating in the formation of new financial systems, any new market entrant will face retaliation from Square. It has to be noted that Square even stood up against deep-pocketed behemoth called Amazon. In 2014 Amazon copied Square’s main product, card reader, undercut its price by 30% and offered free two-day shipping and live customer support.[2] In response Square increased its focus on the quality of its products and customer services. By the end of 2015, Amazon had to discontinue its Register card reader. 3. Legal and regulatory barriers. Finance and banking is one of the most heavily regulated industries worldwide. There are consumer protection laws, anti-money laundering laws, broker-dealer regulations, virtual currency regulations,…